Algorithm

- Definition

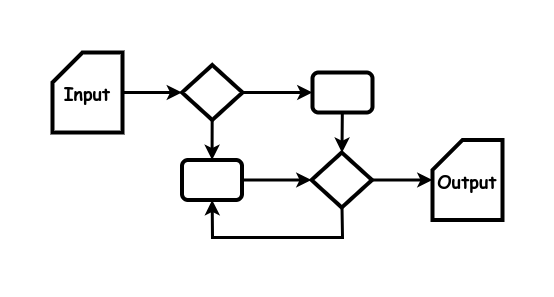

- A finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation.

How does it work?

An algorithm is any routine that solves a problem. There are well-known algorithms for common problems like search, but any code that solves a problem qualifies as an algorithm.

An algorithm is part of a system's business logic. If a known algorithm is available, use it, but make sure it is widely used or well tested. If you write your own algorithm, it's a good candidate for a unit test. If the algorithm passes the test with the most common inputs and with the extreme values (empty input, smallest and largest values, values just outside the valid range), you can be reasonably sure that it works well.
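As a minimal sketch of that advice, the block below tests a hand-written binary search with a common input and with edge cases, then cross-checks it against Python's standard `bisect` module. The names `binary_search` and `check_binary_search` are made up for this illustration.

```python
import bisect


def binary_search(items, target):
    """Return the index of target in the sorted list items, or -1 if absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1   # target can only be in the upper half
        else:
            hi = mid - 1   # target can only be in the lower half
    return -1


def check_binary_search():
    data = [1, 3, 5, 7, 9]

    # Most common input: a value somewhere in the middle.
    assert binary_search(data, 5) == 2

    # Extreme values: first and last element, values outside the range,
    # a missing value inside the range, an empty list, a one-element list.
    assert binary_search(data, 1) == 0
    assert binary_search(data, 9) == 4
    assert binary_search(data, 0) == -1
    assert binary_search(data, 10) == -1
    assert binary_search(data, 4) == -1
    assert binary_search([], 1) == -1
    assert binary_search([7], 7) == 0

    # Cross-check against the widely used standard-library implementation.
    for target in range(-1, 12):
        i = bisect.bisect_left(data, target)
        expected = i if i < len(data) and data[i] == target else -1
        assert binary_search(data, target) == expected


if __name__ == "__main__":
    check_binary_search()
    print("all checks passed")
```

In production code the standard `bisect` module would usually be the better choice, since it is already widely used. The hand-written version is here only to show what the test looks like.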

An algorithm should not only work, it should also be fast enough for its intended use. Design the algorithm with real-world data volumes in mind, and consider using real-world data in the test itself.
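One way to check speed is to time the algorithm at several input sizes, including one close to the expected real-world size. The sketch below compares two hypothetical duplicate checks, one quadratic and one linear. The function names and the sizes are assumptions chosen for illustration, and the actual timings depend on the machine.

```python
import time


def has_duplicates_quadratic(values):
    """Compare every pair of values: simple, but O(n^2)."""
    n = len(values)
    for i in range(n):
        for j in range(i + 1, n):
            if values[i] == values[j]:
                return True
    return False


def has_duplicates_linear(values):
    """Remember values already seen in a set: O(n) on average."""
    seen = set()
    for value in values:
        if value in seen:
            return True
        seen.add(value)
    return False


def time_call(func, values):
    """Return the wall-clock seconds taken by one call of func(values)."""
    start = time.perf_counter()
    func(values)
    return time.perf_counter() - start


if __name__ == "__main__":
    for size in (100, 1_000, 2_000):
        data = list(range(size))  # no duplicates: the worst case for both
        slow = time_call(has_duplicates_quadratic, data)
        fast = time_call(has_duplicates_linear, data)
        print(f"n={size:>5}  quadratic={slow:.4f}s  linear={fast:.4f}s")
```

Both functions give the same answers, so a small functional test would not tell them apart. The difference only shows up when the input size grows.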

Examples

- Determine the linear trend in a series of values

- Calculate an output based on inputs and a series of operations

- Search
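To illustrate the first example, here is a minimal sketch of a linear trend calculation using an ordinary least-squares fit. It assumes the values are evenly spaced, so the x-coordinates are simply 0, 1, 2, and so on. The function name `linear_trend` is hypothetical.

```python
def linear_trend(values):
    """Fit y = slope * x + intercept to values at x = 0, 1, 2, ...

    Uses ordinary least squares and returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to fit a trend")

    mean_x = (n - 1) / 2          # mean of 0, 1, ..., n - 1
    mean_y = sum(values) / n

    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    slope, intercept = linear_trend([1, 3, 5, 7])
    print(slope, intercept)
```

A positive slope means the series is rising on average, a negative slope means it is falling.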

When should you use it?

- Whenever you need to solve a problem or just to calculate something

Problems

- An algorithm may have so many possible combinations of input that it's hard to test fully

- The algorithm may be fast enough for a small test case, but too slow for real-world applications